From Relational Roots to Real-time Riches


Yet to come

Charting the Evolution of the Data Landscape (2001-Present)

The Early 2000s: The Reign of Relational Databases (2001-2007)

In the early 2000s, the data landscape was largely synonymous with Relational Database Management Systems (RDBMS). Titans like Oracle, SQL Server, and DB2 powered most transactional systems and early data warehousing efforts. Data lived in highly structured tables, and SQL was the lingua franca for querying and manipulation.

[Psst... DB2 still gives me chills (2008-2012), though I eventually learned to accommodate it as well.]

During this period, the focus for data professionals was on mastering SQL (DML and DDL), understanding database normalization, and optimizing queries for performance. The core activity revolved around ETL (Extract, Transform, Load) processes, often custom-scripted or built with rudimentary tools, to pull data from operational systems into data marts for reporting. Foundational data modeling skills were paramount, as was the ability to build robust, scalable data pipelines for internal reporting needs, laying the groundwork for what would become sophisticated analytics.

With limited tools and technologies, data solutions were still somehow delivered. I would rather say they were hand-crafted, with chisels and knives. (These were great technologies for their time, with due respect; they are chisels and knives only in comparison with the modern stack.)
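The hand-scripted ETL work described above can be sketched in a few lines. This is a minimal illustration, not a reconstruction of any real tool of the era. It uses Python's built-in `sqlite3` as a stand-in for both the operational RDBMS and the data mart. The `orders` and `daily_sales` tables, their columns, and the `run_etl` function are all hypothetical.

```python
import sqlite3


def run_etl(source: sqlite3.Connection, mart: sqlite3.Connection) -> int:
    """Extract raw orders, transform them, and load a daily sales summary.

    Table and column names are hypothetical, chosen for illustration only.
    Returns the number of summary rows loaded.
    """
    # Extract: pull raw rows from the (hypothetical) operational orders table.
    rows = source.execute(
        "SELECT order_date, region, amount_cents FROM orders"
    ).fetchall()

    # Transform: clean region codes, convert cents to currency units,
    # and aggregate totals per (date, region).
    totals: dict[tuple[str, str], float] = {}
    for order_date, region, amount_cents in rows:
        key = (order_date, region.strip().upper())
        totals[key] = totals.get(key, 0.0) + amount_cents / 100.0

    # Load: fully rebuild the reporting table in the data mart.
    mart.execute("DROP TABLE IF EXISTS daily_sales")
    mart.execute("CREATE TABLE daily_sales (sale_date TEXT, region TEXT, total REAL)")
    mart.executemany(
        "INSERT INTO daily_sales VALUES (?, ?, ?)",
        [(d, r, t) for (d, r), t in sorted(totals.items())],
    )
    mart.commit()
    return len(totals)


if __name__ == "__main__":
    src = sqlite3.connect(":memory:")
    src.execute("CREATE TABLE orders (order_date TEXT, region TEXT, amount_cents INTEGER)")
    src.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [("2005-03-01", " north", 1250), ("2005-03-01", "NORTH", 750), ("2005-03-01", "south", 4000)],
    )
    mart = sqlite3.connect(":memory:")
    run_etl(src, mart)
    print(mart.execute("SELECT * FROM daily_sales ORDER BY region").fetchall())
```

Dropping and reloading the mart table on every run mirrors a simple full-refresh load. An incremental load would instead need a watermark, such as the last loaded date.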

The Late 2000s: The Rise of Data Warehousing & BI Tools (2007-2012)

As businesses grew, so did their data volume and the demand for more sophisticated insights. This era saw the maturation of Data Warehousing concepts, with dedicated platforms designed for analytical processing. We started seeing widespread adoption of specialized Business Intelligence (BI) tools like Cognos, Business Objects, and eventually Tableau and QlikView, moving beyond basic reporting to interactive dashboards and deeper analysis.

At this stage, the emphasis for data professionals shifted towards designing and implementing more complex data warehouse architectures, often employing Kimball methodologies for dimensional modeling. Proficiency in performance tuning massive data loads and optimizing queries for large datasets became crucial. Data engineering skills expanded to include working with specialized ETL tools and frameworks. Critically, the role in reporting and analytics evolved; it wasn't just about building reports, but working closely with stakeholders to define key performance indicators (KPIs) and build interactive dashboards that empowered self-service analytics, truly bridging the gap between raw data and actionable business insights.

[On second thought, Data Marts deserve a mention here as a stepping stone towards the Warehouse: they were easier to adopt, implement, and discuss casually, while "Warehouse" was still a (relatively) heavy term.]
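For readers who did not live through the Kimball era, a dimensional model boils down to a central fact table of measurable events joined to descriptive dimension tables. The sketch below builds a tiny star schema, again using `sqlite3` as a stand-in warehouse. The table names, columns, sample rows, and helper functions are all hypothetical and chosen only for illustration.

```python
import sqlite3

# Hypothetical star schema: one fact table surrounded by two dimensions.
SCHEMA = """
CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, year INTEGER, month INTEGER);
CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, name TEXT, category TEXT);
CREATE TABLE fact_sales (
    date_key INTEGER REFERENCES dim_date(date_key),
    product_key INTEGER REFERENCES dim_product(product_key),
    quantity INTEGER,
    revenue REAL
);
"""


def build_star(conn: sqlite3.Connection) -> None:
    """Create the schema and load a few illustrative rows."""
    conn.executescript(SCHEMA)
    conn.executemany("INSERT INTO dim_date VALUES (?, ?, ?)",
                     [(20100101, 2010, 1), (20100201, 2010, 2)])
    conn.executemany("INSERT INTO dim_product VALUES (?, ?, ?)",
                     [(1, "Laptop", "Hardware"), (2, "Antivirus", "Software")])
    conn.executemany("INSERT INTO fact_sales VALUES (?, ?, ?, ?)", [
        (20100101, 1, 2, 1800.0),
        (20100101, 2, 5, 250.0),
        (20100201, 1, 1, 900.0),
    ])


def revenue_by_category_and_month(conn: sqlite3.Connection) -> list[tuple]:
    """Typical dimensional query: join facts to dimensions, group by attributes."""
    return conn.execute("""
        SELECT p.category, d.year, d.month, SUM(f.revenue) AS revenue
        FROM fact_sales f
        JOIN dim_date d ON f.date_key = d.date_key
        JOIN dim_product p ON f.product_key = p.product_key
        GROUP BY p.category, d.year, d.month
        ORDER BY p.category, d.year, d.month
    """).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    build_star(conn)
    for row in revenue_by_category_and_month(conn):
        print(row)
```

Dashboards built on such a model typically issue queries of this shape: join the fact table to its dimensions, then group by dimension attributes such as category and month.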

The Early 2010s: Big Data and NoSQL Emerge (2012-2017)

The explosion of data, social media, and unstructured information challenged the limitations of traditional relational databases. This period marked the advent of "Big Data" with the rise of Hadoop and its ecosystem (MapReduce, HDFS), alongside various NoSQL databases (MongoDB, Cassandra, etc.). The emphasis shifted from strict schemas to flexibility and massive scalability, often at the expense of ACID compliance.

This was an exhilarating time for the data community. Data professionals embraced new technologies, learning Hadoop ecosystem tools and exploring the nuances of NoSQL data modeling. Data engineering skills broadened to handle distributed computing environments and process semi-structured and unstructured data. Concepts of streaming data began to emerge, recognizing the need for more real-time insights. For reporting and analytics, this meant dealing with increasingly diverse data sources, often blending structured and unstructured data to provide a holistic view and extract value from previously untapped data streams.

The Late 2010s: Cloud, Data Lakes, and the Modern Data Stack (2017-2022)

The latter half of the 2010s witnessed a seismic shift towards the cloud. AWS, Azure, and GCP became dominant forces, offering scalable and cost-effective alternatives to on-premises infrastructure. This led to the proliferation of Data Lakes (storing raw data in its native format) and the emergence of managed data services, transforming how we store, process, and analyze data. The concept of the "Modern Data Stack" began to solidify, characterized by cloud-native tools, open-source technologies, and modular architectures.

Expertise deepened significantly during this period. Data professionals became highly proficient in cloud data platforms (e.g., AWS S3, Redshift, Glue; Azure Data Lake Storage, Synapse; GCP BigQuery, Dataflow). Data engineering work transitioned to building robust, automated data pipelines using cloud-native services and orchestrators like Apache Airflow. Key roles emerged in designing and implementing data lake architectures and establishing robust data governance practices. For reporting and analytics, this meant leveraging the immense processing power of cloud data warehouses and integrating with advanced BI tools, enabling near real-time dashboards and predictive analytics capabilities. The importance of data quality automation also became a critical focus, understanding that reliable insights stem from trustworthy data.
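Data quality automation often starts with simple, repeatable assertions that run after every load. The following is a minimal sketch in plain Python, assuming rows arrive as dictionaries. `run_quality_checks`, `CheckResult`, and the three checks it runs (not-null, uniqueness, non-empty volume) are hypothetical illustrations, not the API of any particular data quality framework.

```python
from dataclasses import dataclass


@dataclass
class CheckResult:
    name: str
    passed: bool
    detail: str


def run_quality_checks(rows: list[dict], key: str, required: list[str]) -> list[CheckResult]:
    """Run a few basic, hypothetical data quality checks over a loaded batch."""
    results = []

    # Completeness: required columns must not contain None.
    for col in required:
        missing = sum(1 for r in rows if r.get(col) is None)
        results.append(CheckResult(f"not_null:{col}", missing == 0, f"{missing} null value(s)"))

    # Uniqueness: the business key must not repeat within the batch.
    seen, dupes = set(), set()
    for r in rows:
        k = r.get(key)
        if k in seen:
            dupes.add(k)
        seen.add(k)
    results.append(CheckResult(f"unique:{key}", not dupes, f"duplicates: {sorted(dupes)}"))

    # Volume: an empty load is often a sign of an upstream failure.
    results.append(CheckResult("row_count>0", len(rows) > 0, f"{len(rows)} row(s)"))
    return results


if __name__ == "__main__":
    batch = [{"id": 1, "amount": 10.0}, {"id": 2, "amount": None}, {"id": 2, "amount": 5.0}]
    for result in run_quality_checks(batch, key="id", required=["id", "amount"]):
        print(result)
```

In a pipeline, a failed check would usually block downstream publishing or raise an alert, so consumers never see untrusted data.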

Today: Real-time, AI/ML, and Data Mesh (2022-Present)

We are now in an era defined by the demand for real-time insights, the pervasive influence of Artificial Intelligence and Machine Learning, and the emerging paradigm of Data Mesh. Data is no longer just for historical analysis; it's the fuel for operational decisions, predictive models, and intelligent applications. Data governance and data observability have become paramount.

In the current landscape, data engineering skills are centered around building sophisticated streaming data pipelines using technologies like Kafka, Flink, and Spark Streaming. There's a strong emphasis on preparing and serving data for AI/ML models, often requiring close collaboration with data scientists. Reporting and analytics capabilities have expanded to include setting up robust data observability frameworks, ensuring data quality and lineage, and implementing advanced analytics solutions that power machine learning applications. Concepts like Data Mesh are gaining traction, advocating for domain-oriented data ownership and self-serve data capabilities. The focus today is not just on moving data, but on making it discoverable, trustworthy, and actionable for everyone, bridging the gap between raw data and true business intelligence at scale.
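Production streaming pipelines would run on Kafka, Flink, or Spark Streaming, but the core idea of a windowed aggregation can be shown without them. This is a simplified sketch in plain Python and does not use any of those frameworks' APIs. The `tumbling_window_counts` function is hypothetical. It assumes events arrive in timestamp order with no late or out-of-order data, which real engines handle with watermarks.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def tumbling_window_counts(
    events: Iterable[tuple[int, str]], window_seconds: int
) -> Iterator[tuple[int, dict[str, int]]]:
    """Yield (window_start, counts_per_key) each time a fixed-size window closes.

    Assumes events arrive in non-decreasing timestamp order (no late data).
    """
    current_start = None
    counts: dict[str, int] = defaultdict(int)
    for ts, key in events:
        # Align each event to the start of its fixed-size window.
        start = ts - ts % window_seconds
        if current_start is not None and start != current_start:
            # A newer window has begun, so emit the finished one and reset.
            yield current_start, dict(counts)
            counts = defaultdict(int)
        current_start = start
        counts[key] += 1
    # Flush the last open window when the input ends.
    if current_start is not None:
        yield current_start, dict(counts)


if __name__ == "__main__":
    stream = [(0, "login"), (15, "click"), (59, "click"), (60, "login"), (125, "click")]
    for window in tumbling_window_counts(stream, window_seconds=60):
        print(window)
```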

This journey, from mastering SQL in relational databases to orchestrating complex cloud-native data pipelines for AI/ML, has been nothing short of transformative. It's a testament to the dynamic nature of data and the continuous innovation within the field. The future of data promises even more exciting advancements, and it's fascinating to witness how the landscape continues to evolve.

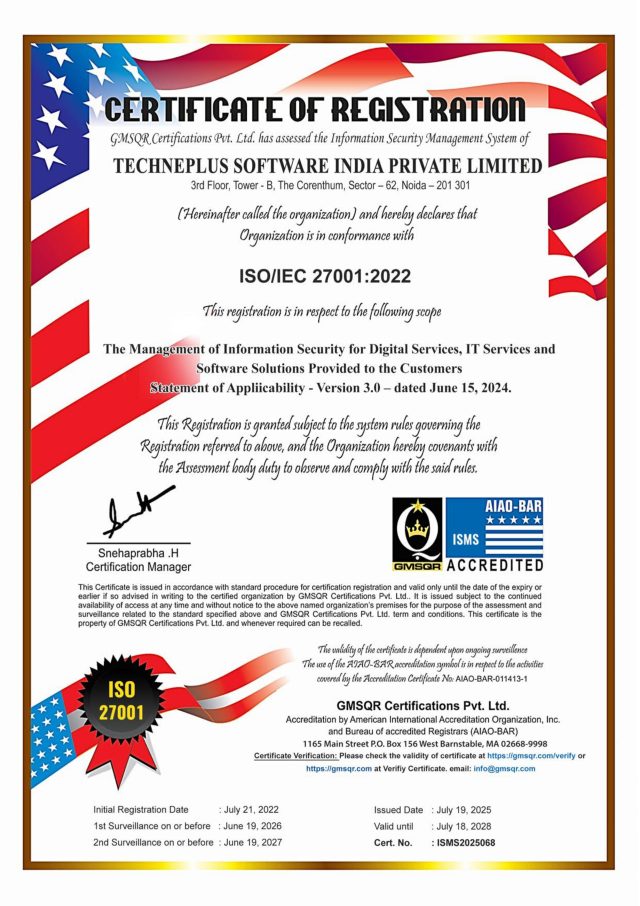

This dynamic evolution in the data landscape is precisely where the TechnePlus Delivery COE for Data excels. Our Data COE is at the forefront of this revolution, consistently working on complex and innovative solutions across the entire modern data stack. From architecting scalable cloud data platforms and designing intricate real-time streaming pipelines to implementing cutting-edge AI/ML-driven analytics and robust data governance frameworks, our expertise spans the full spectrum. We leverage the latest tools and methodologies to empower clients across multiple industry domains, be it BFSI, manufacturing, healthcare, or logistics, by transforming their raw data into actionable intelligence, driving strategic decisions, and delivering tangible business value in today's increasingly data-driven world.

#DataCOE #DataInnovation

Mohit Geherwar

Global Technology, Digital Transformation, Client Acquisitions & Success | Digital Evangelist - Converting ideas into reality at TechnePlus
